Better Networks and Training

I've made some pretty substantial changes since the last post. I modified my code to follow the Izhikevich paper more closely. I rewrote everything in Julia, partly to learn the language and partly because it's faster than Python. I also evolved a network that classifies the iris dataset with 90% accuracy. I'm working to bring that up, and to build a more rigorous training regime analogous to backpropagation but for spiking neural networks (SNNs). In this post I will explain the changes from the previous SNN code as well as the evolution code used to train this network.

The first important change is how the neurons communicate with each other. I had tried using a current input, as I thought that would be more physical and I knew it would be needed for the recreation part of this project. The Izhikevich paper uses constant voltage offsets after each spike. I'm not convinced the current method wouldn't work, but since it wasn't training well, I figured I should go back to the faithful recreation so I didn't beat my head against a wall only for that to be the solution much later. Additionally, the differential equation for updating voltage is nonlinear in voltage but linear in current. This may mean voltage changes implicitly contain more information, even if the current changes would result in a voltage change on the next time step.

EDIT: The paper absolutely does not update voltage directly after each spike. It has a noisy current input, plus an additional current set by the synaptic weight after each pre-synaptic spike. I really don't know why I changed it or where I got the idea. In any case, I'm redoing this and still working on STDP. I hope I have not made any other grievous errors in the neuron implementation.
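For reference, here is a minimal sketch of a single Izhikevich neuron driven by an input current, which is the scheme the edit above describes. It is written in Python for brevity rather than the Julia used in the project, and it is not my actual code. The function name `izhikevich_step`, the `dt=0.5` Euler step, and the default "regular spiking" parameters are choices made for this illustration.

```python
def izhikevich_step(v, u, current, a=0.02, b=0.2, c=-65.0, d=8.0, dt=0.5):
    """Advance one neuron by one Euler step.

    v is the membrane potential (mV), u the recovery variable, and
    current the total input (noise plus synaptic current).
    Returns (new_v, new_u, spiked).
    """
    # A spike is registered when v reaches the 30 mV cutoff;
    # the neuron is then reset before integrating further.
    spiked = v >= 30.0
    if spiked:
        v = c
        u = u + d
    # The input enters as a current term: the update is nonlinear in v
    # but linear in the current.
    dv = 0.04 * v * v + 5.0 * v + 140.0 - u + current
    du = a * (b * v - u)
    return v + dt * dv, u + dt * du, spiked


def count_spikes(current, steps=1000):
    """Drive one neuron with a constant current and count its spikes."""
    v, u = -65.0, -13.0  # illustrative starting state (u = b * v)
    spikes = 0
    for _ in range(steps):
        v, u, spiked = izhikevich_step(v, u, current)
        spikes += spiked
    return spikes
```

With no input the neuron should settle to rest and stay silent, while a sufficiently large constant current should make it fire repeatedly.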

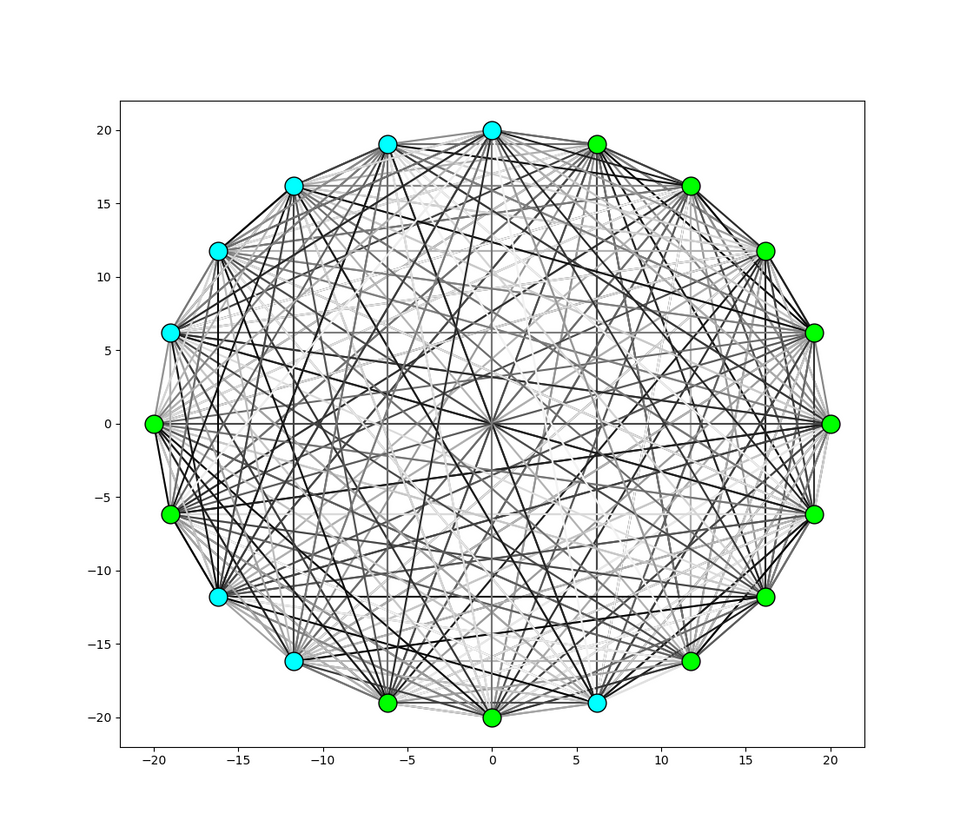

How the neurons are connected has also changed substantially. They were formerly in an almost feedforward pattern. That has all been thrown out. As it stands, there is no physical structure informing which neurons can connect where. There is a weight matrix in which almost any neuron can stimulate almost any other. There are a few exceptions. The biggest is no self-stimulation: no neuron can continuously cause itself to spike. Loops are allowed though, so two neurons could stimulate each other very strongly, causing something similar. I have tried imposing other conditions (no stimulation of input neurons, and sparse matrices so that not every neuron can affect all others), but the current well-trained network does not impose these. More entries just means more tools for evolution to act on, so in my experience imposing extra conditions makes training harder.
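A rough sketch of that connectivity, again in Python rather than the project's Julia: a dense matrix with a zeroed diagonal, plus the current each neuron receives when some set of neurons has just fired. The names `random_weights` and `synaptic_current`, the `weights[post][pre]` layout, and the uniform signed initialisation are all assumptions for illustration.

```python
import random


def random_weights(n_neurons, scale=1.0, rng=None):
    """Dense weight matrix, weights[post][pre], with no self-connections."""
    rng = rng or random.Random()
    weights = [[rng.uniform(-scale, scale) for _pre in range(n_neurons)]
               for _post in range(n_neurons)]
    for i in range(n_neurons):
        weights[i][i] = 0.0  # a neuron may not stimulate itself
    return weights


def synaptic_current(weights, fired, noise):
    """Input current per neuron: its noise term plus the weights
    from every neuron that fired on the previous step."""
    return [noise[post] + sum(weights[post][pre] for pre in fired)
            for post in range(len(weights))]
```

An all-to-all matrix over n neurons with the diagonal removed has n(n-1) free entries, so 20 neurons would give 380.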

Those are the major changes. I probably accidentally adjusted some constants when converting from Python to Julia, but I don't think I changed anything substantial. Let's get into the evolution. The good thing about evolution as a learning algorithm is that it's perhaps the easiest to explain. I scored networks on accuracy over a randomly selected set of iris data and selected those that beat the average score to reproduce. Each child was built by taking every entry of its weight matrix at random from one of two randomly selected winners. Mutations were then introduced and the scoring was run again. This repeated about 1000 times until the final network popped out. If this sounds easy, it's because I left out the part about how scoring was done.
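One generation of that loop can be sketched as below. This is Python, not the Julia code I actually ran, and the function names, the Gaussian mutation, and the `rate` and `scale` defaults are placeholders rather than the values I used. It assumes a higher score is better.

```python
import random


def crossover(a, b, rng):
    """Build a child by taking each entry from one parent at random."""
    n = len(a)
    return [[rng.choice((a[i][j], b[i][j])) for j in range(n)]
            for i in range(n)]


def mutate(weights, rate, scale, rng):
    """Perturb a random fraction of off-diagonal entries with Gaussian noise."""
    n = len(weights)
    return [[weights[i][j] + rng.gauss(0.0, scale)
             if i != j and rng.random() < rate else weights[i][j]
             for j in range(n)]
            for i in range(n)]


def next_generation(population, scores, rng, rate=0.05, scale=0.1):
    """Keep networks that beat the mean score and breed a new population."""
    mean = sum(scores) / len(scores)
    winners = [p for p, s in zip(population, scores) if s > mean]
    if not winners:  # every score equal: nobody beats the mean
        winners = population
    children = []
    for _ in range(len(population)):
        # Parents are drawn independently, so both may be the same winner.
        parent_a, parent_b = rng.choice(winners), rng.choice(winners)
        child = crossover(parent_a, parent_b, rng)
        children.append(mutate(child, rate, scale, rng))
    return children
```

Calling `next_generation` in a loop, rescoring the population each time, gives the roughly 1000-generation run described above.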

Scoring the neural networks seems easy but is actually somewhat complicated, and a poor scoring function can result in unexpected behavior. Usually, networks would evolve to just guess one of the 3 classes, and that would technically be a locally optimal solution in the sense that any mutation would perform worse. Counting how many right answers a network gives seems like a sensible idea, but it falls prey to that failure. It also fails to give any kind of partial credit. The classification works by saying the network "picked" whichever output node had the most spikes. Imagine one network outputs (0, 100, 0) and another outputs (0, 100, 75), where the right choice is the 3rd option. Clearly the second network was closer, but both were still wrong. Of course, trying to implement something like mean squared error (MSE) has its own problems. I would get networks outputting (99, 100, 98) or something similar shuffled around. This gets points for having a high spike count in the correct class, but is still just guessing at random. The method that ended up working was scoring on both MSE and the number of correct guesses. Of course, tuning these so the loopholes weren't the optimal strategy still took some time. If you want details I have code linked here.
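To make the idea concrete, here is one way a combined score could look. This is a hypothetical Python sketch, not the scoring function from my linked code: the normalisation by the largest spike count, the equal weighting of the two terms, and the rule that a tie does not count as a correct guess are all assumptions for illustration.

```python
def score_sample(spike_counts, target, hit_weight=1.0, mse_weight=1.0):
    """Score one sample: reward a correct pick, penalise squared error.

    spike_counts holds the spike count of each output neuron and
    target is the index of the correct class. Higher is better.
    """
    # Scale counts into [0, 1] so the error does not depend on raw spike rates.
    top = max(spike_counts) or 1
    outputs = [count / top for count in spike_counts]
    wanted = [1.0 if i == target else 0.0 for i in range(len(outputs))]
    mse = sum((o - w) ** 2 for o, w in zip(outputs, wanted)) / len(outputs)
    # Assumption: the target must strictly beat every other output to count.
    others = [c for i, c in enumerate(spike_counts) if i != target]
    hit = spike_counts[target] > max(others)
    return hit_weight * hit - mse_weight * mse


def score_network(all_counts, targets):
    """Average the per-sample score over a batch of samples."""
    total = sum(score_sample(c, t) for c, t in zip(all_counts, targets))
    return total / len(targets)
```

With the third class as the target, (0, 100, 75) scores higher than (0, 100, 0) even though both are wrong, which is the partial credit that counting correct answers alone cannot give.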

I am currently trying to implement some better learning rules, mostly spike-timing-dependent plasticity (STDP), because it's very biologically plausible and makes direct use of the spiking nature of the network. I don't feel qualified to talk about it in more depth, or even to recommend reading, as my code using it has not yet given good results. Training SNNs directly was never the purpose of this project, but I would still like to understand it. I could move on to the recreation phase, but that does not thrill me. Since the matrix is so dense, there are effectively 380 synapses, each with a weight to recreate, and that's an order of magnitude more complex than what I did for the ANN project. Maybe it can't be helped. The next post will be about either better training or recreation.