Toward Tuning Curves (Rubber Ducking Again)

Well, the last thing I did was actually kind of a success in the sense that it 1) did learn to reliably differentiate between horizontal and vertical patterns and 2) showed that that rule can't account for all learning in the brain, because it fails to replicate LTP and LTD. Now I want something that can both learn useful things and recreate the incredibly simple dynamics of LTP and LTD, but before that I feel like I should do some more work from where I just was. Maybe fixing up the learning rule a little or expanding the architecture will give me these.

So what is this exactly? Well, while the last article did get that selectivity between horizontal and vertical patterns, it did not fully generalize. What do I mean by this? If the spatial frequency pattern had an offset, it would totally fail. What's more, when I trained the model on data with spatial offsets, it failed to show any selectivity. This isn't actually a huge surprise. Originally, the synaptic weights developed in such a way that if a given pattern was strong in certain areas and weak in others, the network could differentiate them. But a pattern with an offset could be strong anywhere, for any frequency and any orientation. So what we need is a comparator that says the relative strength of this pattern varies along the x-axis but not along the y-axis, or something like that. I don't think single neurons can act as comparators in this way. At least, not the ones I have implemented. See, this is working already.

Ok, a while ago I read about neurons that are resonators rather than integrators. That is, they respond to input frequency rather than total input strength. You can imagine a simple case of a neuron of this type with two inputs. If one input is firing at the resonant frequency of the neuron and the other input is silent, the neuron will fire. If both inputs are firing at the resonant frequency, then unless they are perfectly in sync, the combined input will effectively be at 2x the resonant frequency and the neuron may not fire. If both are silent, then of course the neuron will not fire. This is in some sense an XOR operation, which is famously difficult for traditional machine learning. Like, I think single perceptrons can't learn it and that was a sticking point for a while. Whether that is true or not I don't really care; I'm going to see if I can make an XOR neuron using my IZH model.

My other main idea currently is to give it the lateral inhibition I was using for the MNIST stuff, or just another layer; not sure how that will go. Oh, here is another good option: sprinkle in some inhibitory neurons that laterally inhibit. This is kind of the same as the previous lateral inhibition, but different because these inhibitory neurons would be directly driven by the input. That would be cool, right? It could give rise to XOR behavior. If two input neurons are active, they could stimulate an inhibitory neuron enough to make it fire and inhibit an output neuron that is also taking in both inputs. If only one input neuron is firing, it could be enough to make the output neuron fire but not enough to fire the inhibitory neuron. I hope that makes sense.
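Here is a minimal sketch of that feedforward-inhibition XOR, using binary threshold units instead of Izhikevich neurons so the logic is easy to check. The function names, weights, and thresholds are hypothetical and hand-picked: the inhibitory interneuron only reaches threshold when both inputs are on, and its inhibition is strong enough to cancel both excitatory inputs to the output.

```python
def step(drive: float, threshold: float) -> int:
    """Binary threshold unit: returns 1 (fires) if drive reaches threshold."""
    return int(drive >= threshold)


def xor_with_feedforward_inhibition(x1: int, x2: int) -> int:
    # Hypothetical hand-picked weights and thresholds, not fitted values.
    w_in_out = 1.0   # each input -> output neuron (excitatory)
    w_in_inh = 1.0   # each input -> inhibitory interneuron (excitatory)
    w_inh_out = 2.0  # interneuron -> output neuron (inhibitory)

    # The interneuron needs BOTH inputs to reach its threshold.
    inh = step(w_in_inh * (x1 + x2), threshold=2.0)
    # One input is enough to drive the output, unless the interneuron fires.
    drive = w_in_out * (x1 + x2) - w_inh_out * inh
    return step(drive, threshold=1.0)


truth_table = {(a, b): xor_with_feedforward_inhibition(a, b)
               for a in (0, 1) for b in (0, 1)}
print(truth_table)  # {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```

In a spiking version the timing matters too: the inhibitory spike has to arrive before or alongside the excitatory drive, otherwise the output neuron can fire before it gets vetoed.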

Ok, so I've tried this with the inhibitory neurons. They are stimulated by the output neurons, and this feeds back to inhibit the output neurons. Hope that makes sense; I'll add a picture of the synapses and that will hopefully clear it up. What I have found is that adding these still allows the model to learn fixed patterns and does roughly nothing for learning the patterns with an offset, so that sucks.

Kind of unrelated, but I found this cool paper. Well, it wasn't me, it was actually a coworker, so thanks Max! Actually it kind of pisses me off, because this is exactly the kind of thing I've been looking for for a while, even going so far as to ask on LessWrong and in a private Discord with some like-minded people. Turns out that if you go to Google and search for 'synaptic learning rules in spiking neural networks' you get nothing good, but if you do it on Edge you mysteriously get good results, if your name is Max. Who knew. I should be glad, but it really does feel like I wasted a lot of time not seeing this. Even if that particular paper turns out to be useless (it looks promising, but I am unsure if it will generalize even to my particular problem of offsets, let alone all the other learning tasks I am curious about), it speaks to a general inability on my part to effectively find relevant papers and labs, which I need to do both for my own learning and as I wind up looking at grad school stuff.

Bottom line: just adding inhibitory neurons does not magically make the model learn generalized patterns, and I'm not 100% sure what my next steps are going to be, because getting resonator neurons to work seems like a long shot, and IZH resonators weren't actually that selective for input frequency in my initial testing.

Just, uh, throwing science at the wall and seeing what sticks: I'm going to try having the output neurons excite each other. I don't know if this will work, but it sure would be cool. Everything has worked in the case of static spatial frequency inputs. All I want is for it to generalize if the input is in another place. Is that so hard?
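To pin down what an offset does to the input, here is a sketch of the kind of stimulus involved. I'm assuming the patterns are sinusoidal gratings and that the offset is a phase shift; the post doesn't spell out the exact generator, so `grating` and its parameters are hypothetical. The first check shows the core problem: an offset of pi flips every pixel, so a weight pattern that learned "bright here, dark there" sees the exact opposite input for the same orientation.

```python
import numpy as np


def grating(size: int, orientation: str, freq: float, offset: float = 0.0) -> np.ndarray:
    """Hypothetical size x size sinusoidal grating with values in [0, 1].

    orientation: "horizontal" (stripes; intensity varies down the rows)
                 or "vertical" (intensity varies across the columns).
    freq: cycles per image; offset: phase shift in radians.
    """
    coords = np.arange(size) / size
    rows, cols = np.meshgrid(coords, coords, indexing="ij")
    axis = rows if orientation == "horizontal" else cols
    return 0.5 + 0.5 * np.sin(2 * np.pi * freq * axis + offset)


h0 = grating(8, "horizontal", freq=2)
h_pi = grating(8, "horizontal", freq=2, offset=np.pi)

print(np.allclose(h_pi, 1 - h0))   # True: a pi offset inverts every pixel
print(np.allclose(h0, h0[:, :1]))  # True: each row is constant (horizontal stripes)
```

A random offset per training sample therefore means any given pixel is bright about as often as it is dark for both orientations, which leaves position-specific weights very little to latch onto.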

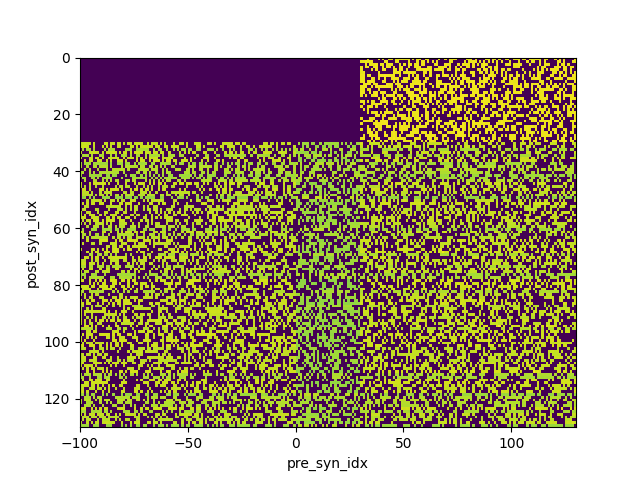

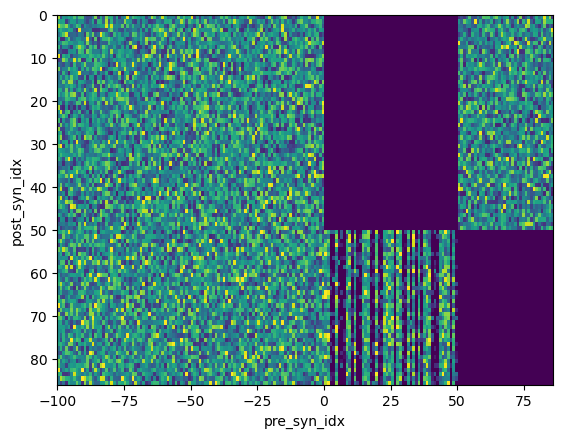

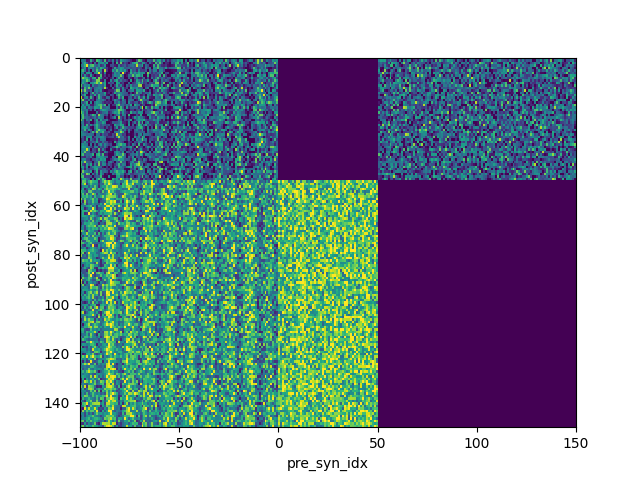

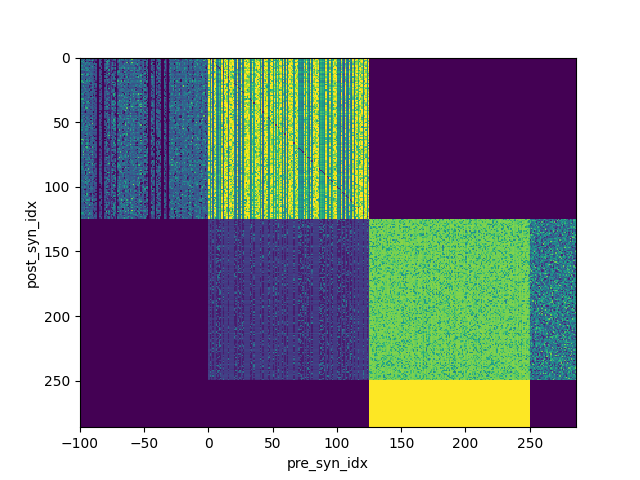

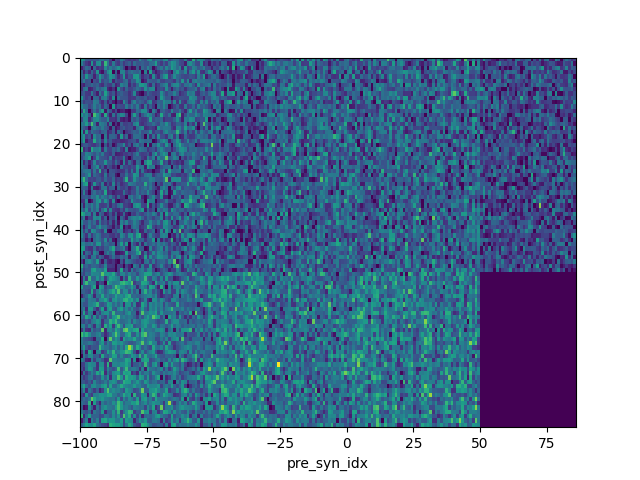

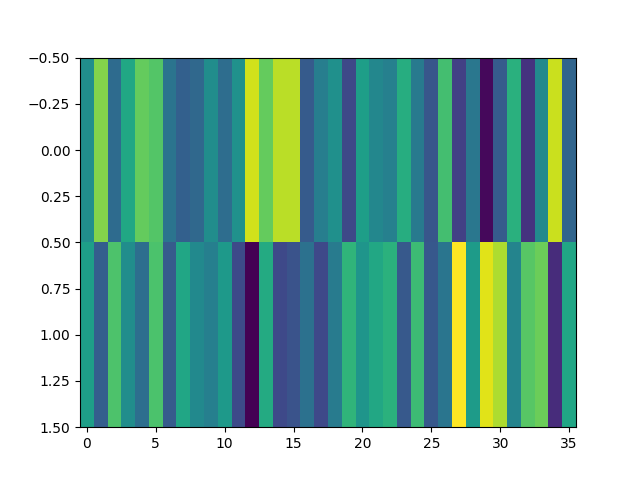

So I trained this as described, an almost densely connected network. The synapses look like this post-training:

Interestingly, it is anti-accurate. It gets ~44% of horizontal vs vertical orientation questions correct when the offset is present, testing on 1000 samples. I think this is just on the ragged edge of statistical significance. I'm not doing the math, but I'm going to turn off the training signal, see how those weights evolve, and then check whether there is a really obvious difference in firing pattern between certain neurons for certain patterns.
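Since I said I'm not doing the math, here is the math as a quick sanity check: an exact two-sided binomial test against chance, in plain Python. Assuming ~44% means about 440 correct out of 1000, chance (50%) would put the standard deviation of the count at about 16, so 440 is roughly 3.8 standard deviations low and the p-value comes out well below 0.001. That suggests the anti-accuracy is a real effect rather than a marginal one. The function names are mine, not from any library.

```python
from math import exp, lgamma, log


def binom_pmf(k: int, n: int, p: float) -> float:
    """Binomial probability P(X = k), computed in log space to avoid overflow."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)


def binom_test_two_sided(k: int, n: int, p0: float) -> float:
    """Exact two-sided binomial test: total probability, under chance rate p0,
    of every outcome that is no more likely than the observed count k."""
    observed = binom_pmf(k, n, p0)
    total = 0.0
    for i in range(n + 1):
        q = binom_pmf(i, n, p0)
        if q <= observed * (1 + 1e-7):  # small tolerance for float ties
            total += q
    return min(1.0, total)


p = binom_test_two_sided(440, 1000, 0.5)
print(p < 0.001)  # True
```

The same check works for other chance levels by changing `p0`, e.g. `p0=0.1` for a ten-class task.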

First of all, that didn't work. What is much more interesting is that the network still displays high selectivity between vertical and horizontal patterns despite having no training signal, provided there is no offset. By manually tuning the offset I could get the network to be right ~90% of the time or wrong ~90% of the time. So it can still distinguish them without the training signal, but the offset is what's really messing it up. I don't know what to do with that information; it's just interesting, I guess. Well, the easiest thing to implement would be a true hidden layer, so I guess I'll do that. The way I'm actually going to do this is to randomly convert some fraction of what are currently inhibitory neurons into excitatory ones. This is the easiest option code-wise, but not very good nomenclature.
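A minimal sketch of that "convert some inhibitory neurons into excitatory ones" trick, assuming neuron types are stored as a boolean mask over the population. `flip_inhibitory_to_excitatory` is a hypothetical helper, not the actual code from this project. The converted neurons keep whatever wiring the inhibitory pool had, which is why they end up acting as a crude hidden layer.

```python
import numpy as np


def flip_inhibitory_to_excitatory(is_excitatory: np.ndarray, fraction: float,
                                  rng: np.random.Generator) -> np.ndarray:
    """Return a copy of the type mask with a random `fraction` of the
    currently inhibitory neurons converted to excitatory."""
    new_mask = is_excitatory.copy()
    inhibitory_idx = np.flatnonzero(~is_excitatory)
    n_flip = int(round(fraction * inhibitory_idx.size))
    chosen = rng.choice(inhibitory_idx, size=n_flip, replace=False)
    new_mask[chosen] = True
    return new_mask


types = np.array([True] * 10 + [False] * 10)  # 10 excitatory, 10 inhibitory
new_types = flip_inhibitory_to_excitatory(types, 0.5, np.random.default_rng(0))
print(new_types.sum())  # 15
```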

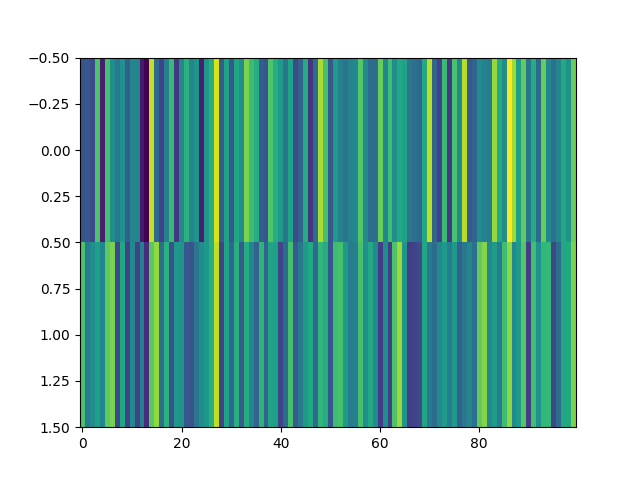

At first blush this didn't really work: my normal testing code showed very little selectivity, but when I break it down by individual neurons it actually shows some promise.

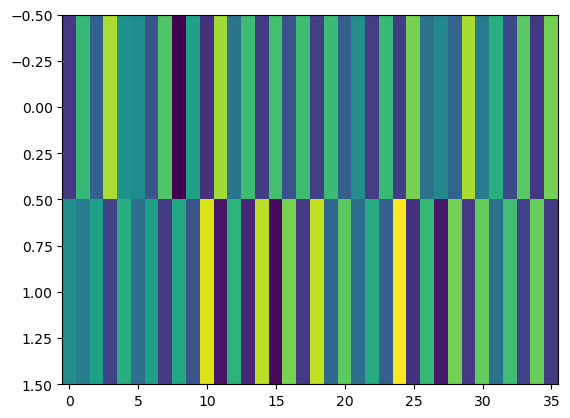

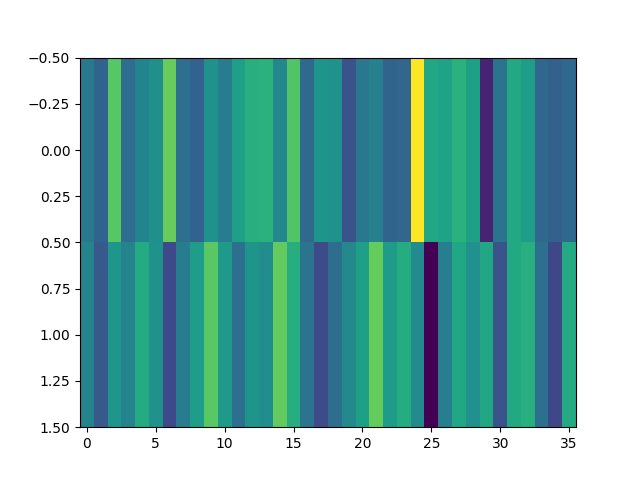

The top row is for horizontal and the bottom for vertical. Or vice versa; I don't actually know, and it is kind of arbitrary. Man, I should really label my axes and stuff. Ok, so the x-axis is the output neuron index and the color corresponds to the firing rate. On average there isn't much difference, but certain neurons do show a big difference in firing rate between vertical and horizontal, which is what I want. It is quite late at night. I am going to push this code and hopefully pick it up in the morning.

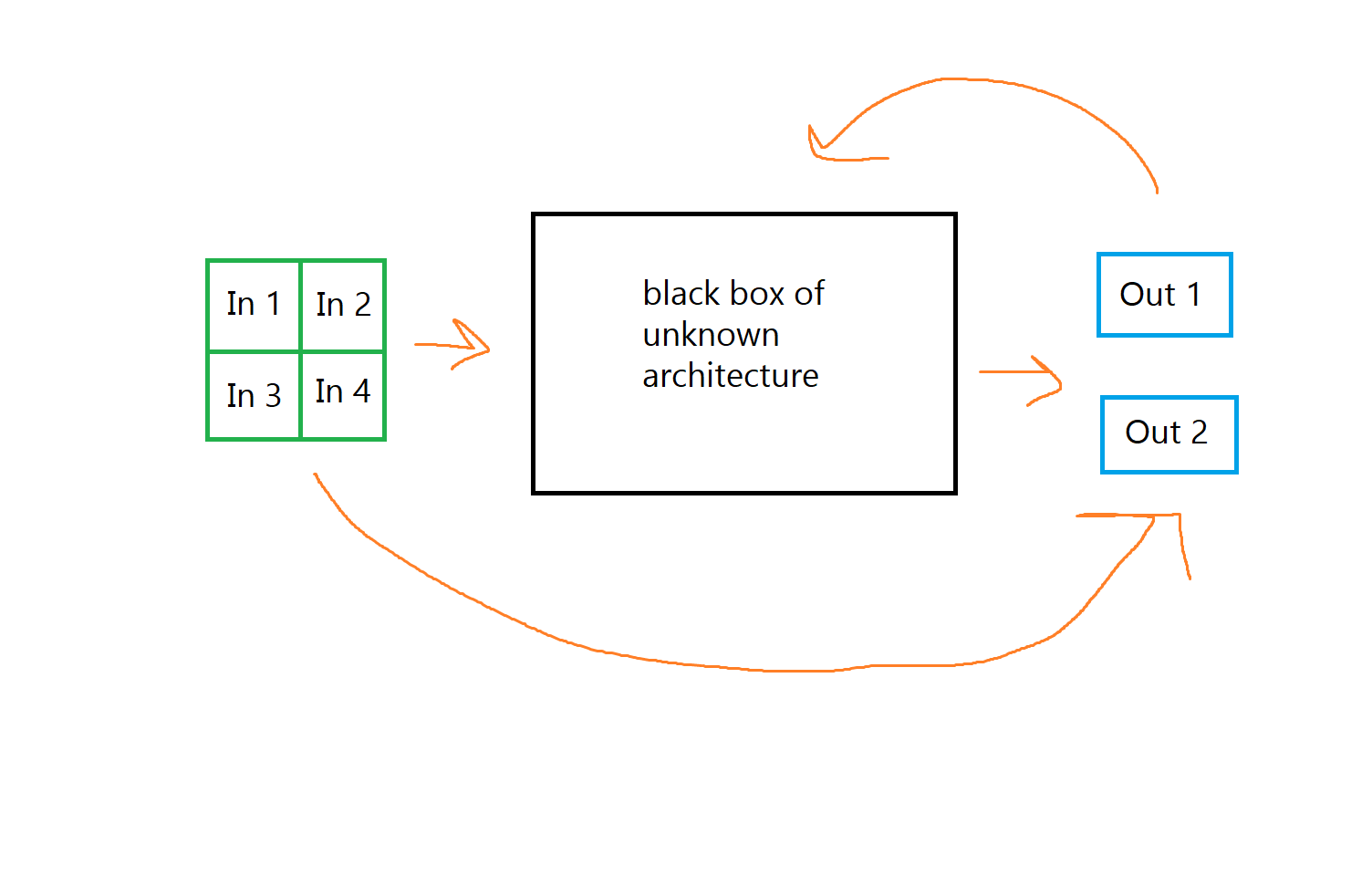

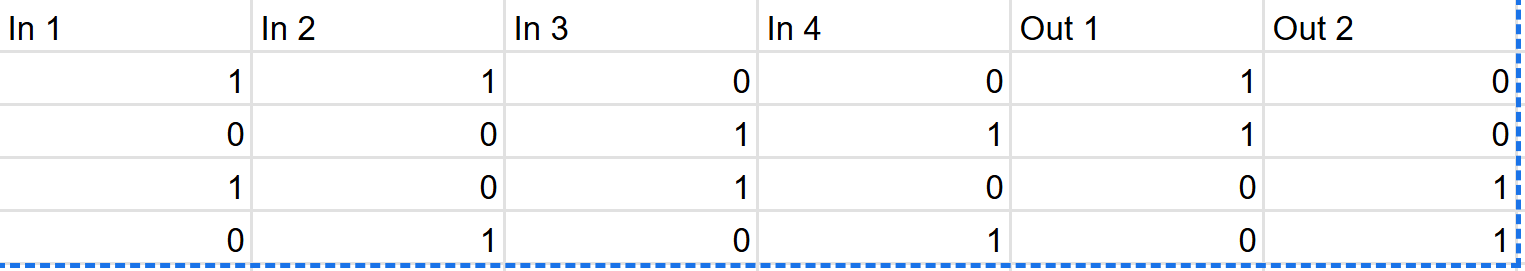

I think I'm going to massively simplify the system again. I can get away with a mere 2x2 input array and 2 output neurons. This can still represent vertical or horizontal lines in multiple positions. Maybe it will automatically and easily learn these patterns and I'll be able to scale up until I find some critical disconnect. Maybe it won't and I'll have a smaller, faster system to debug.

Formally, I can put this in a logic table like so:
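One way to write that table out, assuming the 2x2 inputs are ordered (top-left, top-right, bottom-left, bottom-right) and that only exactly one full row or one full column counts as a line. `label_2x2` is a hypothetical helper. What it makes obvious is that every pixel belongs to one horizontal line and one vertical line, so no single input weight can separate the classes; the output has to respond to pairs of inputs.

```python
from itertools import product


def label_2x2(tl: int, tr: int, bl: int, br: int) -> str:
    """Label a binary 2x2 image as 'horizontal', 'vertical', or 'neither'."""
    rows = [(tl, tr), (bl, br)]
    cols = [(tl, bl), (tr, br)]
    if tl + tr + bl + br != 2:
        return "neither"
    if any(all(r) for r in rows):
        return "horizontal"
    if any(all(c) for c in cols):
        return "vertical"
    return "neither"  # the two diagonal patterns


table = {bits: label_2x2(*bits) for bits in product((0, 1), repeat=4)}
for bits, label in table.items():
    if label != "neither":
        print(bits, label)
# (0, 0, 1, 1) horizontal
# (0, 1, 0, 1) vertical
# (1, 0, 1, 0) vertical
# (1, 1, 0, 0) horizontal
```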

Now, obviously it would be trivially easy to build something with digital logic to do this operation. I could make a much more direct competition between the two outputs (I guess I technically didn't draw that in the highly technical figure). I've essentially done this already by having the output neurons project onto inhibitory ones, but still. This is really starting to remind me of that bistable thing I worked on so long ago. It had two excitatory regions and one mutual inhibition region. I wonder if that would work for this? Of course, that had to be kind of meticulously tuned to get the bistability, and it didn't really generalize to a large number of classes: each region could only indicate one class, whereas a more efficient encoding might have one big hidden region and only a few neurons per class. Still, that might be relevant.

Well that was a waste of time, making the network that small stripped out any learning ability. Cool.

So I just ran the bog-standard code once again to see it classify sans offset, and something very intriguing happened. It was highly anticorrelated despite the training mask being present. I don't know what to make of this. I am going to run it without the mask to see if it is similarly selective.

It's like 70% selective sans training signal, so that's cool. Maybe with a longer training run it would get higher. I could definitely cheese it by intentionally selecting the best cells for each class. When I finally get this to generalize I'll see what I can do, because unsupervised learning without even a reward signal is pretty cool.

I think the most logical thing to do is to start having a properly different rule for the inhibitory neurons. I clearly need to shake up the learning rules in general. Two good options are:

- Take spike-based learning rules from that paper I said Max found

- Rules/rough inspiration from this

I don't really like that second one so much because it has a different homeostatic mechanism. I mean, I could have both mine and theirs (multiplicative scaling of the pre-synapses, I think), but the exponential decay I have now seems so natural. I don't really like the first one because I'm still jealous I didn't find it, but also because they didn't test theirs on offset patterns and I'm worried it will be a huge time sink. Still, I have to try something.

So, the second source actually says that subtractive synaptic scaling is better, so maybe instead of having each individual synapse decay, I have a decay for each neuron, or even a global decay. Yeah, I like this idea. I don't have a good theoretical reason to think it would lead to the network generalizing to recognize the offset, but it is really easy to try and it's different. They also talk about synaptic redistribution. I really don't understand that, which means it definitely isn't in the current model. I think it is a probabilistic model of spike transmission: if the pre-synaptic neuron fires, there is only a chance that a spike is sent to the post-synaptic neuron. The longer the pre-synaptic neuron goes without firing, the higher the probability, until it saturates; similarly, if the pre-synaptic neuron is highly active, the probability decreases to some minimum. Really interesting. It would be much more work to add, I've literally never heard of anyone else doing this, and it seems realistic but annoying as hell. I hope it isn't actually computationally relevant (like the min/max probabilities are just really close in most cells or something). I will implement the first of these ideas tomorrow, I hope.
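Here is a sketch of the probabilistic transmission idea as I described it above, not as the source defines it: release probability `p` recovers exponentially toward `p_max` while the pre-synaptic neuron is silent, and each pre-synaptic spike knocks it a fixed fraction of the way down toward `p_min`. The class and all parameter values are hypothetical. For what it's worth, this is close in spirit to standard short-term depression models, of which the Tsodyks-Markram model is the usual reference.

```python
from typing import Optional

import numpy as np


class StochasticSynapse:
    """Hypothetical release-probability synapse.

    p relaxes toward p_max between pre-synaptic spikes (time constant
    tau_recover, in seconds) and each spike moves it a fraction `depression`
    of the way toward p_min. A pre-synaptic spike is transmitted with
    probability p.
    """

    def __init__(self, p_min: float = 0.1, p_max: float = 0.9,
                 tau_recover: float = 0.5, depression: float = 0.3,
                 rng: Optional[np.random.Generator] = None):
        self.p_min, self.p_max = p_min, p_max
        self.tau_recover = tau_recover
        self.depression = depression
        self.p = p_max  # a rested synapse starts at maximum release probability
        self.rng = rng if rng is not None else np.random.default_rng()

    def step(self, pre_spiked: bool, dt: float) -> bool:
        """Advance by dt seconds; return True if a spike reaches the post-synaptic side."""
        # Exponential recovery toward p_max.
        self.p = self.p_max + (self.p - self.p_max) * np.exp(-dt / self.tau_recover)
        if not pre_spiked:
            return False
        transmitted = bool(self.rng.random() < self.p)
        # Activity-dependent depression toward p_min.
        self.p = self.p_min + (1.0 - self.depression) * (self.p - self.p_min)
        return transmitted


syn = StochasticSynapse(rng=np.random.default_rng(0))
for _ in range(200):    # 200 spikes at 1 kHz: heavy use
    syn.step(True, dt=0.001)
print(round(syn.p, 2))  # close to p_min
for _ in range(5000):   # 5 s of silence
    syn.step(False, dt=0.001)
print(round(syn.p, 2))  # back near p_max
```

Whether this matters computationally comes down to how far apart `p_min` and `p_max` are in practice, which is exactly the open question in the paragraph above.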

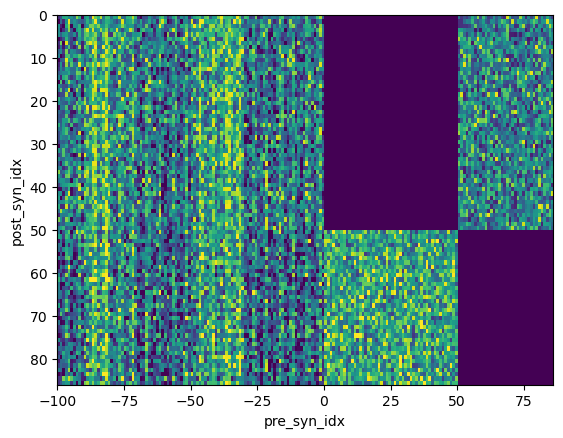

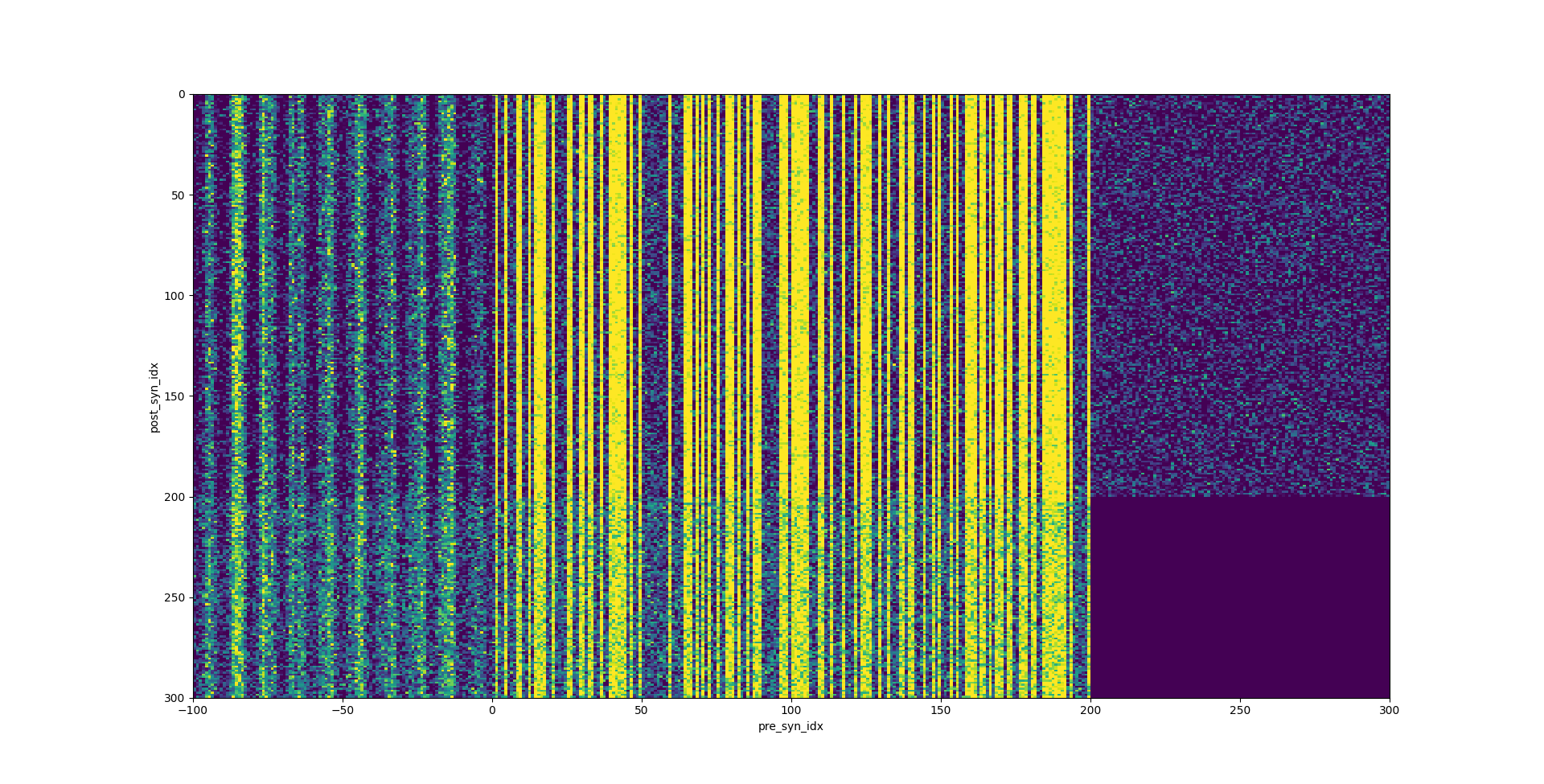

Did a modest amount of work so far today. I tried a different rule for the inhibitory neurons specifically. I think I tried 2 or 3, actually, but currently I have their weights increase in proportion to the square of the firing rate of the post-synaptic neuron. It doesn't help, but it does stop them all from just trending to zero, which I think was happening before. I also have it set to use that global decay. Honestly, the global decay might be what was causing the inhibitory weights to go to zero, but I really don't care. See, this is what I get for writing something even a few hours after doing it: memory of a damn goldfish. Below is what the synapses look like with global decay:

And here is what happens with the modified inhibitory rule, the one using the square of the post-synaptic neuron's activity:

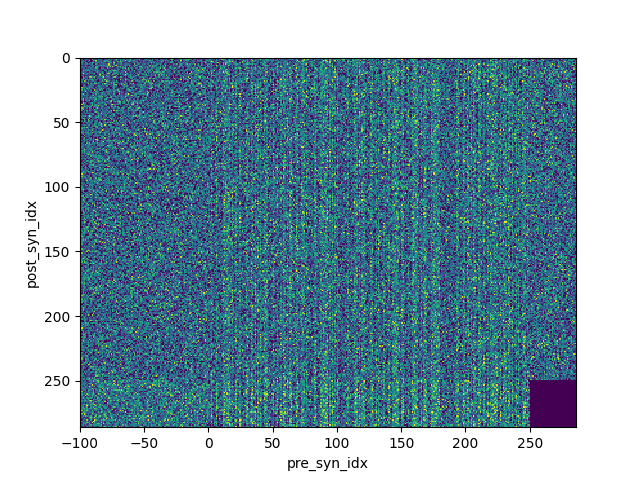

As you can see, there is some structure and the inhib neurons now actually have weights! This is the selectivity when there is no offset:

It's pretty great. After that I ran it in unsupervised mode, so no training mask. The results are here:

The actual accuracy is not great, but the neurons still clearly have a big difference in firing rate, so that's great. I'm not going to pursue this too much, but it is cool to see competition arise naturally from these rules. Unfortunately, still not much progress on the whole offset front.

Current plan is to see if this can classify the small MNIST dataset. If it can, that's really cool, and it's a more complex task than horizontal vs vertical. It still doesn't address my current issue, but hey, I'm out of ideas. Or at least, I have nothing to add until I have more time to really give it some STDP stuff. I should also see if this can learn across layers. That was one of my big frustrations with the old MNIST thing I was working on: adding hidden layers made it worse. I wonder if it will fail to learn even simple horizontal vs vertical with 2+ layers. That would make me very sad.

Well, the MNIST thing looks cool but it isn't great. It's like 14% accurate, I guess. I'm going to test it again with a larger sample size and try to get those single-neuron firing rates broken down by MNIST class. Yeah, 14% accuracy is meaningful given my sample size of 1000 (chance for ten digit classes is 10%). Again, not great, but something is happening. I am going to try jacking up the number of hidden neurons and see what happens; 50 isn't that huge all told. That is an overnight problem, though, so no more updates today. Astute readers might notice that the hidden layer does not project onto itself. I am going to change that as well, just for the heck of it, and see what happens.

So obviously that is very streaky, so I might need to tune the inhibitory learning rule more, but it got 40% accuracy just from the scale-up. It was at 200 hidden neurons for that run, so I'm going to increase the hidden size to, say, 600 and see what happens.

I tried 600 hidden and only got a lousy 17% accuracy :(

That was with only a 1500 batch size, I think, so IDK, I could bump that up.

I think the next steps are to

- Go back to working on the generalized tuning curve thing instead of wasting time with another MNIST project

- Change the inhibitory rule so it's more stable, it looks way too high here and that's what is causing the streaks

NGL, I have not been taking sufficient notes. Like, I know what's going on because I am currently doing it, but I need to be much clearer about my code going forward.

Currently trying to use that inhibitory rule on the horizontal/vertical classification with the offset present. It's the version where the increase in inhibitory weight is proportional to the firing rate of the post-synaptic neuron, not the square or anything. I really don't want to add that guy's STDP rules from scratch only to find out they don't generalize to my problem (I really don't think they would, because they only used static reference images), but who knows. There are no obvious paths to take right now and it is kind of frustrating.

Ok, I'm going to decide that the next test is adding more hidden layers, even if they are grossly interconnected, and seeing whether the learning rule generalizes to those and whether that fixes all my problems. The takeaways from this post will then be:

- Whether or not this learning rule can effectively recreate tuning curves of spatial frequencies at various angles with a constant offset

- If it can occur unsupervised (it can to some extent)

- Whether it generalizes to multilayer systems

Ok, this is going to serve as a little checkpoint for everything before I wrap this up with the multi-layer thing. The current state of affairs is as follows:

- For excitatory pre-synaptic neurons, the weights are updated according to a BCM rule

- For inhibitory pre-synaptic neurons, the weights are increased proportionally to the post-synaptic firing rate

- All synaptic weights decay according to the mean difference between the synapses and the default value, this encourages competition which is necessary for highly selective activity

- During supervised training, neurons outside the region that is meant to be firing have their firing rate artificially halved; in accordance with the BCM rule, this decreases their weights more than if they were silent

- When there is unsupervised learning (rules applied with no training signal), individual neurons still show pretty high selectivity but not quite as much as if there was a signal present

- So far, no rules/architectures have resulted in the ability to distinguish horizontal patterns from vertical patterns if those patterns can have a random offset

- After training with a random offset for each input, testing these horizontal vs vertical patterns with a constant offset will generally show very high selectivity; the problem is that which region preferentially responds to horizontal vs vertical patterns changes based on the offset
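To make that checkpoint concrete, here is a rate-based sketch of one plasticity step that combines the rules in the list. It is a reconstruction from the bullet points, not the project's actual code: the function name, the learning rates, the sliding BCM threshold (a running average of the squared post-synaptic rate), clipping weights at zero, and storing weights as non-negative magnitudes with the sign handled elsewhere are all my assumptions.

```python
import numpy as np


def plasticity_step(W, pre_rate, post_rate, theta, exc_pre, conn_mask, w_default,
                    target_mask=None, eta_exc=1e-3, eta_inh=1e-3,
                    decay=1e-2, theta_rate=0.1):
    """One hypothetical update. W: (n_pre, n_post) weight magnitudes.

    exc_pre: bool (n_pre,), True for excitatory pre-synaptic neurons.
    conn_mask: bool (n_pre, n_post), True where a synapse exists (at least one).
    target_mask: bool (n_post,) neurons meant to fire, or None (unsupervised).
    """
    post = post_rate.astype(float)  # copy, so the caller's rates are untouched
    if target_mask is not None:
        # Supervision: halve the rates of neurons outside the target region.
        post[~target_mask] *= 0.5

    # Excitatory pre-synaptic: BCM, dW = eta * pre * post * (post - theta).
    d_exc = eta_exc * np.outer(pre_rate, post * (post - theta))
    # Inhibitory pre-synaptic: grow in proportion to the post-synaptic rate.
    d_inh = eta_inh * np.broadcast_to(post, W.shape)
    dW = np.where(exc_pre[:, None], d_exc, d_inh)
    W = W + dW * conn_mask

    # Global subtractive decay: every existing synapse shifts by the same
    # amount, set by the mean deviation from the default weight.
    W = W - decay * (W[conn_mask] - w_default).mean() * conn_mask
    W = np.clip(W, 0.0, None)

    # Sliding BCM threshold tracks a running average of post_rate ** 2.
    theta = theta + theta_rate * (post_rate ** 2 - theta)
    return W, theta


exc_pre = np.array([True, True, False, False])
conn = np.ones((4, 3), dtype=bool)
W, theta = plasticity_step(np.ones((4, 3)), pre_rate=np.ones(4),
                           post_rate=np.full(3, 1.5), theta=np.ones(3),
                           exc_pre=exc_pre, conn_mask=conn, w_default=1.0,
                           target_mask=np.array([True, False, True]))
print(W[0, 0] > W[0, 1])  # True: target neuron potentiated, non-target depressed
```

The usage shows the halving trick doing its job: the non-target neuron's halved rate (0.75) sits below its BCM threshold (1.0), so its excitatory inputs get depressed, while silence would have left them unchanged.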

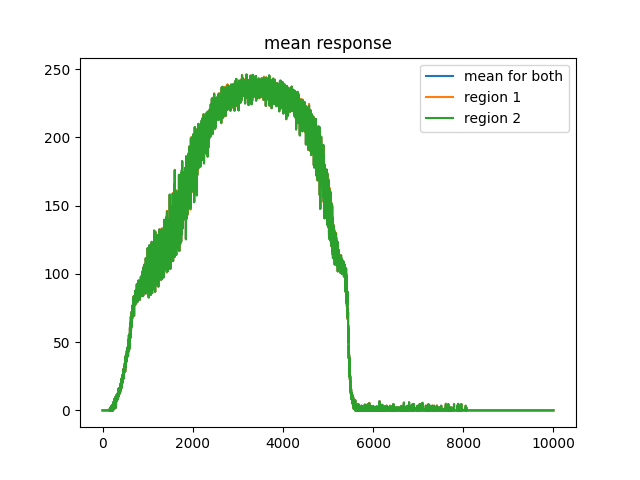

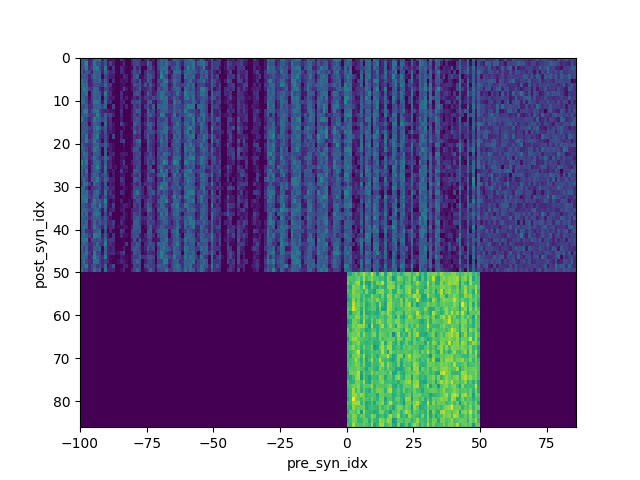

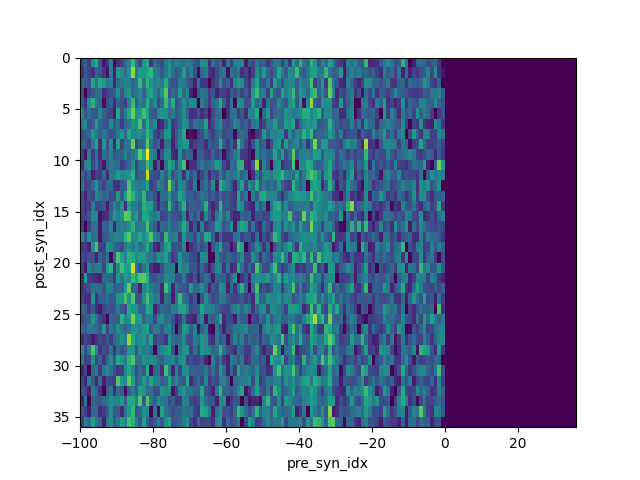

That is what the synaptic weights look like after 1500 simulated seconds, one input pattern per second. Here is the code to recreate everything.

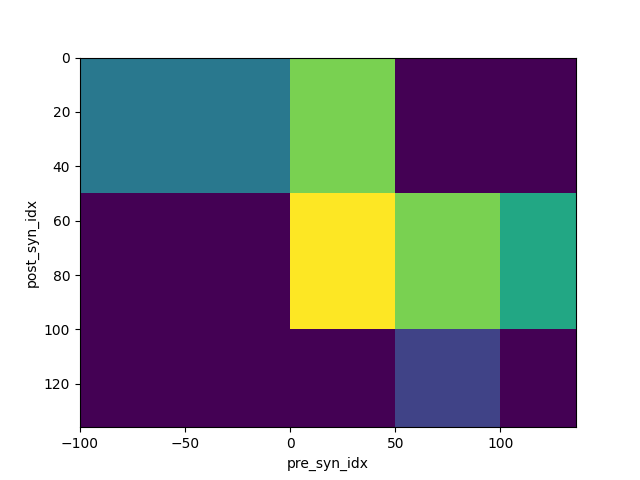

Ok! That is not quite the usual synaptic weight array, so I'm going to explain it by color.

- dark purple is just 0: there is no connection between the pre- and post-synaptic neurons

- lighter purple is the last hidden layer to the output neurons

- kind of teal color is the output neurons projecting back onto the last hidden layer for feedback

- green is the hidden neurons connecting within their respective layer

- yellow is the connection from the first hidden layer to the second

- and finally the blue, that is in the top left, is the input to the first hidden layer

This code is expandable to an arbitrary number of hidden layers, so if it shows any promise I might just bump it up to 3 or 4 and see what happens. Here is this version of the code.

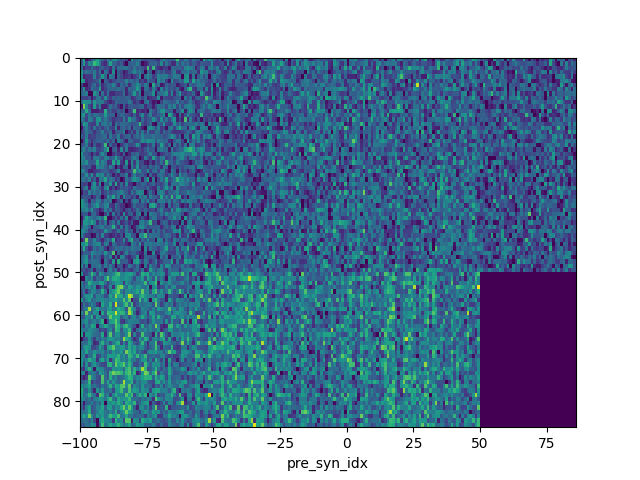
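Here is a sketch of how a block-structured connectivity mask like the one in the color list above could be built for any number of hidden layers. The ordering [input, hidden_1, ..., hidden_L, output], the `direct_input_to_output` flag, banning self-synapses, and leaving out the inhibitory neurons are my simplifications, and `build_connectivity` is a hypothetical helper, so the blocks won't line up exactly with the colors in the figure.

```python
import numpy as np


def build_connectivity(n_in, hidden_sizes, n_out, direct_input_to_output=True):
    """Return (mask, slices) for a pre x post boolean connectivity matrix
    ordered [input, hidden_1, ..., hidden_L, output] (hypothetical sketch)."""
    sizes = [n_in, *hidden_sizes, n_out]
    bounds = np.cumsum([0, *sizes])
    slices = [slice(bounds[i], bounds[i + 1]) for i in range(len(sizes))]
    n = bounds[-1]
    mask = np.zeros((n, n), dtype=bool)
    inp, hidden, out = slices[0], slices[1:-1], slices[-1]

    def connect(pre, post):
        mask[pre, post] = True

    if hidden:
        connect(inp, hidden[0])                    # input -> first hidden
        for a, b in zip(hidden[:-1], hidden[1:]):
            connect(a, b)                          # hidden_k -> hidden_{k+1}
        for h in hidden:
            connect(h, h)                          # recurrent within each layer
        connect(hidden[-1], out)                   # last hidden -> output
        connect(out, hidden[-1])                   # output -> last hidden (feedback)
    if direct_input_to_output or not hidden:
        connect(inp, out)                          # optional input -> output shortcut
    np.fill_diagonal(mask, False)                  # no self-synapses
    return mask, slices


mask, (inp, h1, h2, out) = build_connectivity(4, [3, 3], 2)
print(mask.shape)  # (12, 12)
```

Setting `direct_input_to_output=False` gives the "only a hidden layer, no direct connection" variant, and adding entries to `hidden_sizes` stacks more layers without touching anything else.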

Hmm, that really did not work. I think it is clear that I need to tune the inhibitory learning rule. Here are the synapses:

I have worked on this too long; we need to wrap this up with a summary of the results so far so I can make a good choice about what to work on next.

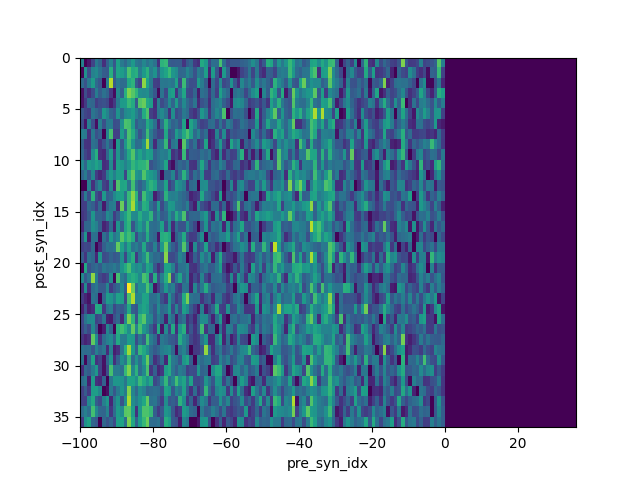

These are the synapses of a network trained for 2000 trials with no offset in the training data and with a training signal. It is literally 100% accurate when no offset is present, but it's still garbage when an offset is added. The synapses are relatively stable; they don't tend to trend off toward extremes, at least not on this time scale.

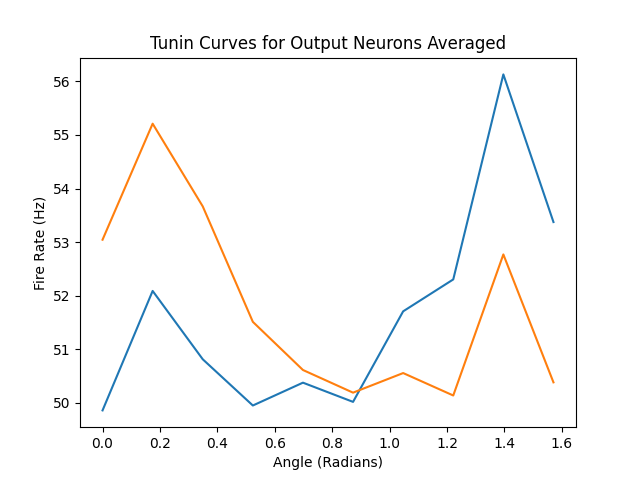

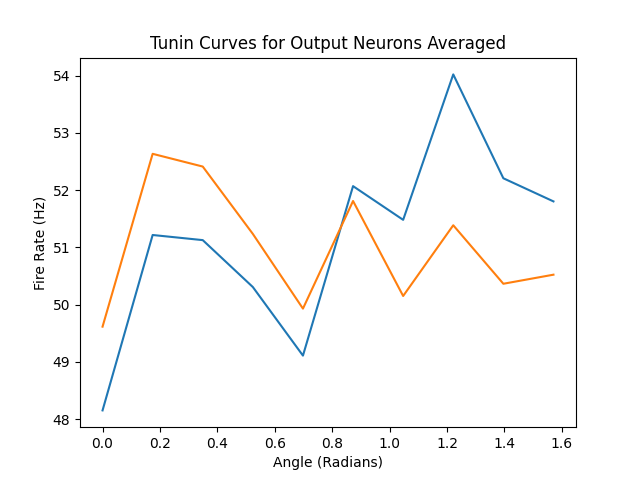

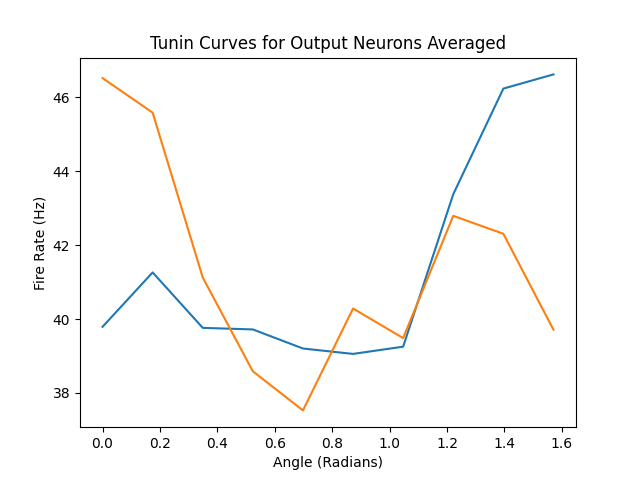

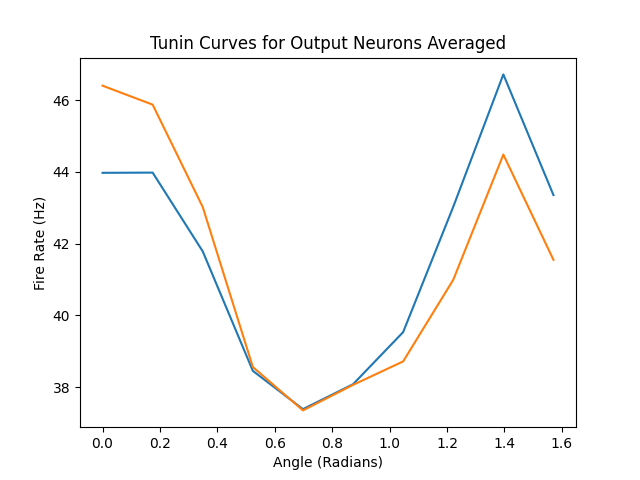

This is the tuning curve averaged over the neurons belonging to each output subpopulation. There are very obvious peaks around the 0 and pi/2 angles, which makes sense given the training. Interestingly, there are smaller peaks near those same points for the 'wrong' subpopulation. Also, the absolute difference in firing rate is quite small. Making the firing rate difference larger and the smaller peaks disappear would be a marginal improvement, but it would be nice and could have knock-on fixes if I'm lucky.
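A small sketch of the analysis in that paragraph, assuming firing rates are stored as an (angles x neurons) array: average each subpopulation's tuning curve, then turn "the absolute difference is quite small" into a number with a normalized selectivity index, (a - b) / (a + b), which is 0 for no preference and +/-1 for exclusive responses. Both function names are hypothetical, and the toy cos^2 / sin^2 tuning in the usage is made-up data, not results from this network.

```python
import numpy as np


def subpopulation_tuning(rates: np.ndarray, labels: np.ndarray) -> dict:
    """rates: (n_angles, n_neurons); labels: (n_neurons,) subpopulation ids.
    Returns {label: (n_angles,) mean firing rate of that subpopulation}."""
    return {int(c): rates[:, labels == c].mean(axis=1) for c in np.unique(labels)}


def selectivity_index(curve_a: np.ndarray, curve_b: np.ndarray) -> np.ndarray:
    """(a - b) / (a + b) per angle; defined as 0 where both rates are 0."""
    total = curve_a + curve_b
    return np.divide(curve_a - curve_b, total,
                     out=np.zeros_like(total, dtype=float), where=total > 0)


# Toy data: even neurons prefer 0 rad, odd neurons prefer pi/2.
angles = np.array([0.0, np.pi / 4, np.pi / 2])
labels = np.arange(6) % 2
rates = np.where(labels == 0, np.cos(angles)[:, None] ** 2, np.sin(angles)[:, None] ** 2)
curves = subpopulation_tuning(rates, labels)
print(np.round(selectivity_index(curves[0], curves[1]), 3))
```

Smaller "wrong" peaks and a small absolute rate difference both show up here as an index that stays well away from +/-1 at the trained angles.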

Here are those results for an unsupervised network. It attained 82% accuracy, but that is without actually sorting the cells into categories based on how they respond. I don't know if I already described this, but the test code assumes all n%2 == 0 neurons are in class 1 and all n%2 == 1 neurons are in class 2, regardless of their actual tuning curves. This makes sense when you train with a signal enforcing that, but it isn't fair in the unsupervised case. Anyway, it's pretty cool that distinct tuning curves still arise without a training signal following the same rules, albeit not nearly as sharp.
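A sketch of the fairer unsupervised readout hinted at above: assign each neuron to whichever class drives it hardest on training trials, instead of assuming n%2 parity, then classify by which assigned group fires most. The helpers are hypothetical, and the toy data is constructed so that parity is exactly wrong, just to show the difference; on real data you'd want to assign on one set of trials and score on held-out ones.

```python
import numpy as np


def assign_by_response(train_rates, train_labels, n_classes):
    """Assign each neuron to the class that drives it hardest on average.
    train_rates: (n_trials, n_neurons); train_labels: (n_trials,)."""
    mean_per_class = np.stack([train_rates[train_labels == c].mean(axis=0)
                               for c in range(n_classes)])  # (n_classes, n_neurons)
    return mean_per_class.argmax(axis=0)


def readout_accuracy(test_rates, test_labels, neuron_class, n_classes):
    """Predict the class whose assigned neurons have the highest mean rate.
    Assumes every class has at least one assigned neuron."""
    scores = np.stack([test_rates[:, neuron_class == c].mean(axis=1)
                       for c in range(n_classes)], axis=1)  # (n_trials, n_classes)
    return float((scores.argmax(axis=1) == test_labels).mean())


# Toy data: neurons 1 and 3 prefer class 0, neurons 0 and 2 prefer class 1.
labels = np.array([0, 1] * 5)
prefers_class0 = np.array([False, True, False, True])
rates = np.where((labels[:, None] == 0) == prefers_class0[None, :], 1.0, 0.1)

parity = np.arange(4) % 2
print(readout_accuracy(rates, labels, parity, 2))  # 0.0
assigned = assign_by_response(rates, labels, 2)
print(readout_accuracy(rates, labels, assigned, 2))  # 1.0
```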

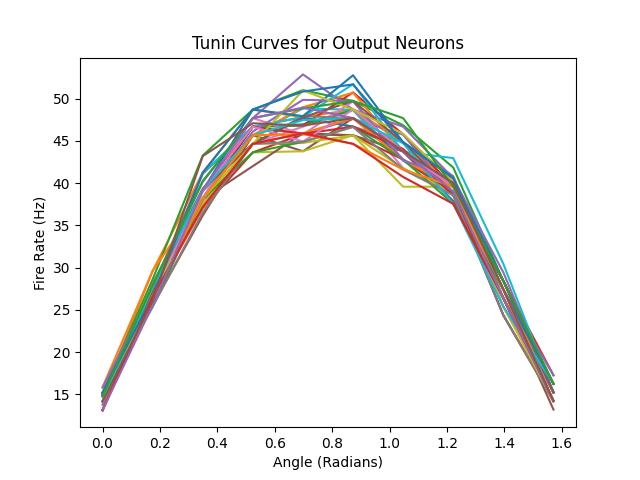

So I tested this with only a hidden layer, no direct connection from input to output. It performed extremely poorly, like 51% accuracy, which is just chance. The tuning curve looks really interesting though: it peaks in the middle for all neurons.

I don't know if that means I can somehow tune the inhibitory learning rule or something to get this to actually work, because in some sense all the neurons are really highly selective to angle; they're just selective in exactly the same way, which is not useful for real learning. Getting a network working with multiple layers should be a relatively high priority, as it is both realistic and a problem I have encountered before.

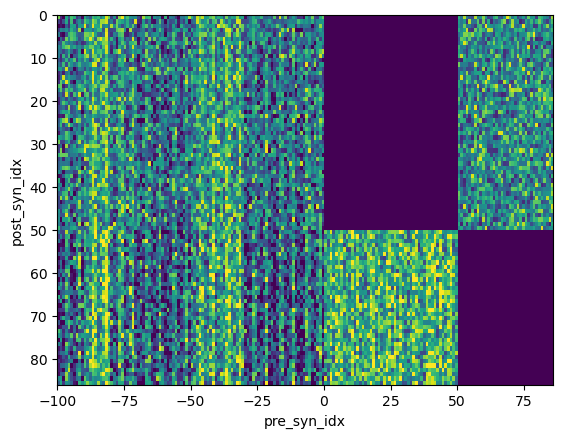

Last thing is looking at performance without a hidden layer present at all.

So what the hell is the point of the hidden layer? I honestly don't know at this point. When it is added it doesn't change accuracy and might make the tuning curve worse, and when there isn't direct communication from the input to the output layer it doesn't work at all. Who has a learning rule that can work for multilayer neural networks? Please, I'm on my knees begging here.

Ok so I ran this one more time, unsupervised with no hidden layer. Here are the results:

And there we go, that's the answer I guess. In the case of unsupervised learning with no hidden layer, you get much more similar tuning curves across all neurons than with a hidden layer that has inhibitory synapses. I guess the hidden layer isn't useless after all. I mean, it is in a practical sense in this very limited model, but what can you do.

Well, honestly this seems kind of like a failure. I don't 100% know what I even want the immediate next steps to be. There needs to be some way to transmit information between distinct layers; I should find that. Maybe there just aren't any parts of the brain that don't connect directly? That seems incredibly unlikely, actually, but the connection density is definitely much higher than in most ANNs. We also didn't reach the main goal I originally set out for: getting tuning curves that react to a given spatial frequency horizontally but not vertically regardless of where the peaks fall, that is, with an arbitrary offset. I don't know why this is so hard, honestly. I didn't get a chance to test the very natural generalizations of that either: different spatial frequencies in the same orientation, something that reacts to a certain spatial frequency but not others regardless of orientation, or spatial frequencies that also vary over time. Every time I try to answer a question, all I get are more questions. This just ain't right! I guess I did improve the inhibitory learning rule, so that's neat. Still, I really wish I was making faster progress.