What I've Been Working* On

It has been some time. I have made what I would honestly consider only modest progress. I feel like I really haven't given comp neuro the full attention it deserves, but also that I hit really hard roadblocks in the projects I've been working on, which is tough. The short version is that I kind of replicated two papers on my lonesome. This isn't a huge accomplishment but I think I learned a fair bit, enough that it's worth sharing, and one of the projects is hopefully going to get fleshed out in the next post. I was trying to do "actual research" on something and I thought replicating this paper would be kind of a side project that would give me a base to explore. It took way longer than I thought and is not as good as I hoped, but it should be just good enough to do the thing I wanted, so I'm calling it quits at least for the time being.

This was such a far spin-off from my actual main project that I haven't even really thought about it since I realized it would probably not work, but it is cool and it is something I did, so I thought I should share. Plus it lets me explain a frustration I had, so that's always a good reason to bring something up. Here's a fun question: what is actually happening in your brain when you make a decision? This first paper modeled it like this: certain populations of neurons are stimulated, then they compete with each other and self-stimulate to maintain persistent activity in one population that corresponds to some bit of information. They (and then I) implemented a bistable circuit out of neurons where an input could lead to randomly picking one of the two populations to be very dominant in activity. A targeted pulse to one of the two populations could select that population as the dominant one. My goal was to use this to make a classifier, the input being some data and the output being dominant, self-reinforcing activity in one region. This didn't really work. I still think it is a cool idea I may come back to, but other architectures are probably better for a classifier anyway.

The other paper I replicated* was a spiking neural network with a slightly more refined architecture for image recognition, with a supervised but still biologically plausible learning rule. I say replicated with a tremendous asterisk, as I had to change a good deal about the paper to get even somewhat acceptable accuracy on the MNIST digit dataset. I don't know exactly what I did wrong, because I forced myself to not just copy their code, but their learning rules did not yield good results in my experience. I did end up having some success with two different learning rules I kind of came up with myself, but they were hardly novel and the accuracy still wasn't that great. The best I ever got was around 75%, so clearly it was learning something, but not to the degree it should for such an easy task. Combine the frustrating number of changes with my highly unoptimized code taking forever to run before showing results, and I just don't have it in me to refine this particular part of the project anymore without working on something else first.

I will get into the details of both of these papers, but first I would like to explain the overall motivation for working on them. I want to answer a few fundamental questions about error/noise tolerance in the brain. It is no secret that the brain is a very noisy environment. In fact, some of the work I recently started at my day job is quantifying just that. Additionally, scanning the brain is still extremely challenging. Getting a whole connectome is still a technical hurdle, and extracting synaptic weights purely from morphology will be challenging, if it is possible at all. To my knowledge, the OpenWorm project had to tune their weights considerably after the initial estimates of synaptic weight based on the number of synapses between neurons. Whether or not that is exactly how it happened doesn't really matter. I like the idea of mind uploading, both for model organisms to do pure science and also for a lot of very optimistic sci-fi futures. But the question is, how accurate does the scan have to be to replicate all relevant behavior? How many connections can be missing outright? How much can weights deviate from their true values? How harmful are spurious connections between neurons? Answering these questions is essentially impossible in vivo; at least, I couldn't practically or ethically do it even if I had access to my lab's full suite of hardware for whatever I wanted. So, I decided to investigate how artificial spiking neural networks would behave given these modifications. There is a nice easy SNN trainer out there, but it works using surrogate gradient backpropagation, which is not biologically plausible at all. So I had to train my own networks. Of course, the learning rules themselves are of interest to me so it wasn't a big imposition, but this has been a very frustrating project and it has cast a seed of doubt about the validity of a couple of things. Mostly my ability to effectively learn new things, but also the state of computational neuroscience.
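To make those three kinds of scan error concrete, here is a minimal sketch of how a trained weight matrix could be degraded before re-testing the network. It is not code from either paper or from my project; the function name `perturb_weights` and every parameter (`drop_prob`, `noise_sd`, `spurious_prob`, `spurious_scale`) are made up for illustration, and treating a weight of exactly 0 as "no connection" is an assumption.

```python
import random

def perturb_weights(weights, drop_prob=0.0, noise_sd=0.0,
                    spurious_prob=0.0, spurious_scale=0.1, seed=0):
    """Return a perturbed copy of a weight matrix given as a list of rows.

    drop_prob:      chance that an existing connection is deleted outright
    noise_sd:       relative std. dev. of Gaussian error on surviving weights
    spurious_prob:  chance that an absent connection (weight 0) is invented
    spurious_scale: largest magnitude of an invented weight (hypothetical)
    """
    rng = random.Random(seed)
    perturbed = []
    for row in weights:
        new_row = []
        for w in row:
            if w != 0.0:
                if rng.random() < drop_prob:
                    w = 0.0  # missing connection
                else:
                    w = w * (1.0 + rng.gauss(0.0, noise_sd))  # mis-measured weight
            elif rng.random() < spurious_prob:
                # spurious connection that was never there in the "true" network
                w = rng.uniform(-spurious_scale, spurious_scale)
            new_row.append(w)
        perturbed.append(new_row)
    return perturbed

if __name__ == "__main__":
    true_weights = [[0.5, 0.0, -0.3], [0.0, 0.8, 0.0]]
    print(perturb_weights(true_weights, drop_prob=0.2, noise_sd=0.1, spurious_prob=0.1))
```

The idea would be to sweep one parameter at a time, re-run the classifier on the perturbed weights, and plot accuracy against the amount of damage.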

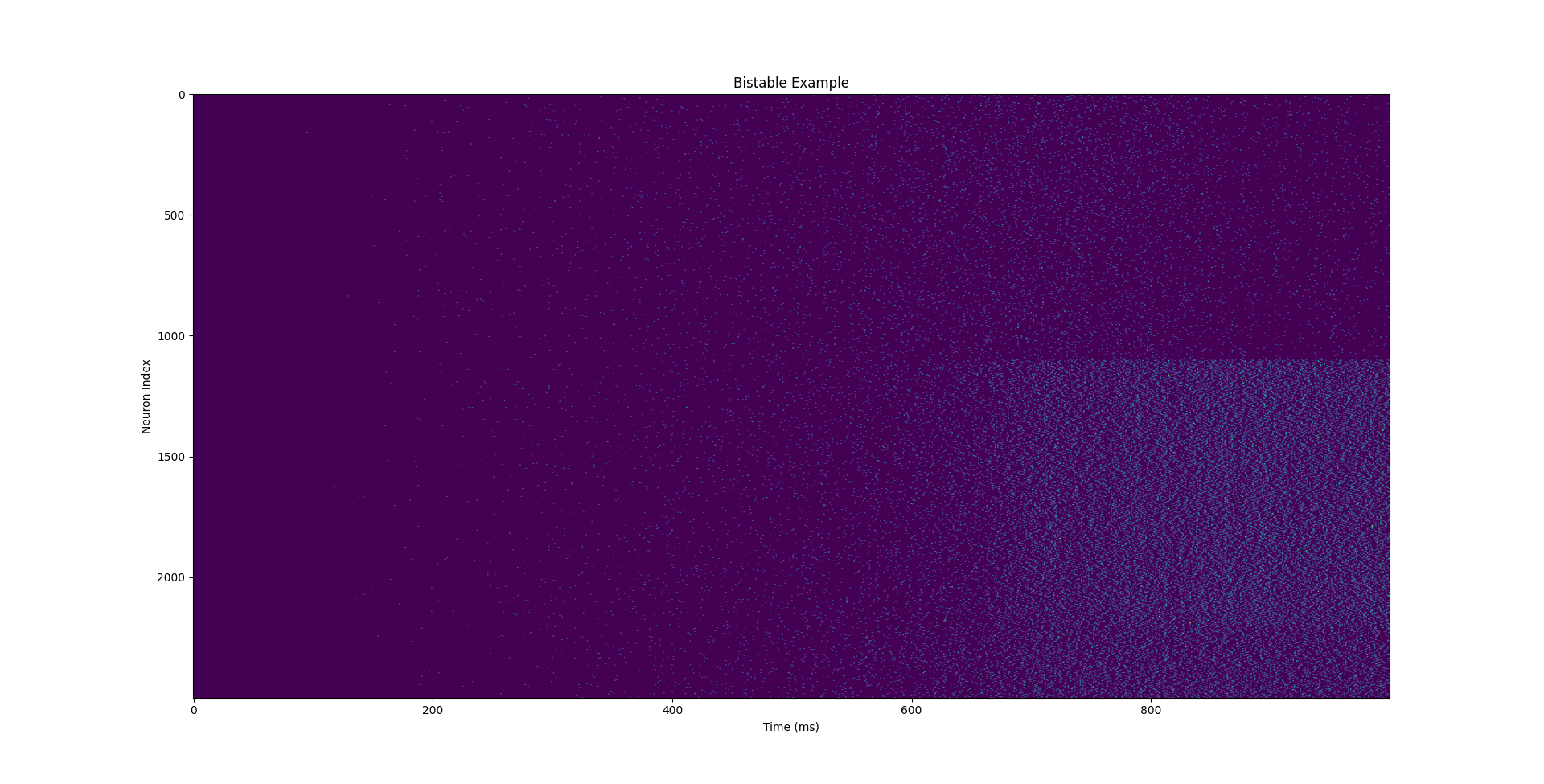

Sorry this is jumping around a bit, but let's focus on the first paper. It's called Probabilistic Decision Making by Slow Reverberation in Cortical Circuits. As stated before, it models decision making with different populations of neurons competing for persistently elevated activity. A stimulus comes in and acts on two populations equally. Activity in each of these populations can further stimulate other neurons in the same population but not in the other. Both populations stimulate a third population of inhibitory neurons that inhibits both of them equally. After some time, the self-stimulation of one population grows to the point where it maintains its own activity while driving the shared inhibitory population hard enough to suppress almost all activity in the other population. I make it sound quite simple, but this process is very sensitive to the strength of the input stimulus, the weights connecting each of the populations, and the number of neurons in each population. That said, here is an example image of what the firing rate might look like over time for this simulation:

Oh and here is the code for that. Now, you can see how this would immediately be attractive to me as a method of classifying an input, as it has a very natural competition between different classes and a high number of neurons, which should allow for interesting feedback to make choices. There are a few problems with this approach. Because of the high feedback, the time between the initial stimulus and the choice being made is very short, so in practice very little information can influence which population is chosen. A 1 second long simulation may have less than 100 ms of spiking activity before one population becomes too active to be suppressed, even if it is the wrong choice. Additionally, adding multiple populations for multiple classes is a challenge. I think I got up to 4 populations all competing but it was not scalable, at least not without a lot of tuning. This also might be some kind of bias or just wrong, but my intuition is that this cannot be how the brain makes complicated choices. One population being active at a time seems like such an inelegant and wasteful way of representing information. If you wanted 16 possibilities you would need 16 populations. Contrast this with even a simple binary approach: N populations that are each on or off give 2^N combinations, so 16 distinct possibilities would only need 4 populations. This is to say nothing of the information density of a continuous variable representing activity and/or fuzzy boundaries on each population. As such, I never got this working as a classifier for MNIST because of these limitations. If I had thrown myself into it the way I did the next paper maybe I could have, but since the original paper didn't even promise that level of capability I think it was the right choice not to pursue it.
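If you want a feel for the winner-take-all dynamics without a full spiking simulation, here is a toy rate-based sketch. To be clear, this is not the paper's model and not my replication: the paper uses populations of spiking neurons, while this tracks one firing rate per excitatory population, assumes the inhibitory population follows the summed excitation instantly, and uses made-up parameters (`w_self`, `w_inh`, `theta`, `stim`, `pulse`) picked only so the behavior shows up.

```python
import random

def clip01(x):
    """Clamp a value to the range [0, 1] (a crude firing-rate nonlinearity)."""
    return max(0.0, min(1.0, x))

def simulate_competition(pulse_to=None, noise_sd=0.05, seed=0,
                         t_total=1000.0, t_stim=500.0, dt=1.0, tau=20.0,
                         stim=0.5, pulse=0.1, w_self=3.0, w_inh=1.5, theta=0.2):
    """Two excitatory populations with self-excitation and shared inhibition.

    Both populations get the same stimulus until t_stim (times in ms).
    pulse_to=0 or 1 adds a small extra input to that population.
    Returns a list of (t, rate_0, rate_1) tuples, rates in [0, 1].
    """
    rng = random.Random(seed)
    r = [0.0, 0.0]
    history = []
    for step in range(int(t_total / dt)):
        t = step * dt
        # Assumption: inhibition tracks total excitatory activity instantly.
        r_inh = r[0] + r[1]
        new_r = []
        for k in range(2):
            drive = stim if t < t_stim else 0.0
            if pulse_to == k and t < t_stim:
                drive += pulse
            x = (drive + w_self * r[k] - w_inh * r_inh - theta
                 + rng.gauss(0.0, noise_sd))
            # Euler step of tau * dr/dt = -r + f(x)
            new_r.append(r[k] + dt / tau * (-r[k] + clip01(x)))
        r = new_r
        history.append((t, r[0], r[1]))
    return history

if __name__ == "__main__":
    for seed in range(5):
        _, r0, r1 = simulate_competition(seed=seed)[-1]
        print(f"seed {seed}: population {0 if r0 > r1 else 1} wins")
```

With no pulse the noise decides the winner, a small pulse to one population biases the outcome toward it, and the winner stays active after the stimulus is switched off at `t_stim`. Even in this toy version the split happens within a few tens of milliseconds, which is the same short decision window that made the real circuit hard to use as a classifier.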

So, what about that other paper? This one is called A biologically plausible supervised learning method for spiking neural networks using the symmetric STDP rule, and boy is it way more complicated than that last one. The tagline is that they used STDP (kind of) and a homeostatic mechanism to train a neural network to achieve high accuracy on the MNIST image recognition dataset. Perfect! That is exactly what I'm looking for: biological neural networks being trained in a biologically plausible way to do something real. Unfortunately, replicating their results was way harder than I thought and the process cast doubt on the biologically plausible part. Don't get me wrong, it's a great paper and it is biologically plausible, but the process of replicating it has raised the bar for what I really want to model myself.

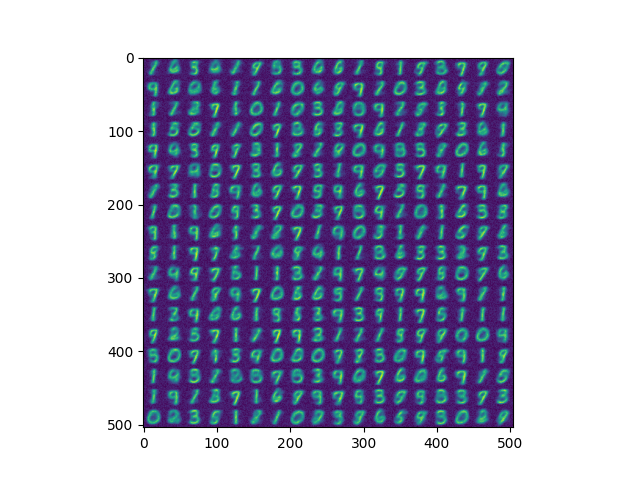

So how does it actually work? There is an input layer that encodes the image of an MNIST digit into spikes. It is 28x28, the same dimensions as the image, but these are not actually modeled as LIF neurons, they are just spike trains. After that there is a kind of double hidden layer; in mine it is 18x18 excitatory neurons and 18x18 inhibitory neurons. The way this works is kind of silly but I'm not changing it: whenever a given excitatory neuron fires, a corresponding inhibitory neuron also fires and inhibits all the other neurons in the excitatory layer. The excitatory layer is excited by the input. And finally there is an output layer where each neuron corresponds to a given class. The class whose neuron fires the most is chosen. Each neuron has a variable threshold: if the neuron is firing too much the threshold increases, and vice versa. The synaptic weights increase in accordance with what they call a symmetric DA-STDP rule. The regular STDP rule can depress the synaptic weight if the pre-synaptic neuron fires after the post-synaptic neuron. Their rule still increases the weight in that case, just less. They claim this is biologically plausible and has been observed in vivo. As this can only ever increase synaptic weight, weights are normalized such that the mean weight into a given neuron remains constant, or at least weights are pushed in that direction if they become too high. You're going to have to forgive me for not being detailed about this; their paper is relatively clear, and I had to change a good bit of this, so my motivation to give the exact equations they used is at an all-time low.
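Since I'm skipping the equations, here is a rough sketch of the three ingredients as described above: a potentiation-only STDP window that is weaker when the pre-synaptic spike comes second, normalization of the mean incoming weight, and a threshold that drifts with firing. These are not the paper's equations. The exponential window, the amplitudes `a_causal` and `a_acausal`, the hard rescaling, and the linear threshold update are all placeholder assumptions.

```python
import math

def stdp_increment(dt_ms, a_causal=0.010, a_acausal=0.005, tau_ms=20.0):
    """Weight change for one spike pair, with dt_ms = t_post - t_pre.

    Never negative: pre-before-post gets the larger amplitude,
    pre-after-post still potentiates, just less.
    """
    amplitude = a_causal if dt_ms >= 0 else a_acausal
    return amplitude * math.exp(-abs(dt_ms) / tau_ms)

def normalize_incoming(weights, target_mean):
    """Rescale one neuron's incoming weights so their mean is target_mean."""
    mean = sum(weights) / len(weights)
    if mean == 0:
        return list(weights)  # nothing to rescale
    return [w * target_mean / mean for w in weights]

def update_threshold(theta, spike_count, target_count, rate=0.01):
    """Raise the threshold if the neuron fired too much, lower it otherwise."""
    return theta + rate * (spike_count - target_count)

if __name__ == "__main__":
    incoming = [0.2, 0.4, 0.6]
    incoming[0] += stdp_increment(5.0)    # pre fired 5 ms before post
    incoming[1] += stdp_increment(-5.0)   # pre fired 5 ms after post
    print(normalize_incoming(incoming, target_mean=0.4))
    print(update_threshold(1.0, spike_count=8, target_count=5))
```

The normalization step is what keeps a rule that only ever increases weights from blowing up: potentiating one synapse effectively takes weight away from its neighbors.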

So why was this paper so hard to replicate? I'm not really sure. Part of it is that I didn't optimize my code, because I kept telling myself that one more experiment would surely do it, so it would be a waste to spend time rewriting everything in C++. This attitude made each test take hours, because I was simulating thousands of seconds for the neural net plus a training rule on top of that. But aside from that, I genuinely don't know what I did wrong. I could do a proper autopsy and compare versions of my code to the code they have published, but the whole point is that I wanted to replicate their results by reading their paper. Anyone can copy code, but if I can't build it from scratch I don't understand it. Anyway, my first trials copied the paper as closely as I could: the exact LIF model they used, the same learning rule, batch size, input encoding, everything. The problem is, this doesn't work, or at least it didn't for me. I believe they got the results they claimed and I just messed up, but it was seriously frustrating to get terrible results (~10% accuracy, same as random chance) after taking pretty detailed notes on how their network worked. After that I decided to explore some other options. I would love to live in a world where I hopped into LaTeX and typed out all my equations nice and neat, but instead of that I'm just going to say the lines of my code that I'm referencing. Specifically, I modified the learning rules and the input encoding. I didn't really touch the architecture or the LIF model. I did have redundant output neurons but I don't think that mattered much. The learning rule I ended up going with is reminiscent of the original but really quite different. It's kind of a modification of a BCM rule, where the change is proportional to the activity of the pre- and post-synaptic neurons, but it isn't quite that simple.
(Lines 82-102) My rule respects the intuitive difference I would expect for inhibitory neurons, that is, a weight should strengthen if an inhibitory neuron is highly active and a post-synaptic neuron isn't. This strengthening was paired with a decay term that would decrease the weight of a synapse in proportion to its distance from some default value. (Line 95) In the final code that default value was just 0, but I imagined that it might be helpful to have the weights not decrease past some point. As for the adaptive threshold, that was also a simple decay when low activity was present. (Line 189) This is in contrast to the deviation from an average value as originally stated in the paper. Input encoding was also changed. They had a simple system where the intensity was converted to a spike train for each pixel, for each image. If too few spikes were detected in the hidden layer, the maximum frequency of the input was increased and the simulation was run again. My method divided the intensity of each pixel by the mean value of all pixels, multiplied it by some small constant, and then converted the result into a spike train. (Line 216) In a practical sense this means each image should send approximately the same number of spikes in a given time, even though a 1 has far fewer high-intensity pixels than an 8. I really don't know why this works better for me; all I know is that their method did not work at all every time I tried it. Using all that together, I got 75% accuracy on MNIST, not great but clearly it is learning something. Also, when you do some trickery with the weights going into the hidden neurons, you get nice pictures like this:
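Going back to the input encoding for a second, here is a minimal sketch of the mean-normalized version described above. It is not the code those line numbers refer to. The names, the `scale` constant, and the choice of an independent coin flip per pixel per time step as the spike train conversion are all assumptions for illustration.

```python
import random

def spike_probabilities(pixels, scale=0.02):
    """Per-time-step spike probability for each pixel of one image.

    Each intensity is divided by the image's mean intensity and multiplied
    by a small constant, then capped at 1 so it stays a valid probability.
    """
    mean = sum(pixels) / len(pixels)
    if mean == 0:
        return [0.0] * len(pixels)  # blank image: no spikes
    return [min(1.0, scale * p / mean) for p in pixels]

def encode_spike_train(pixels, n_steps, scale=0.02, seed=0):
    """Return n_steps rows of 0/1 spikes, one column per pixel."""
    rng = random.Random(seed)
    probs = spike_probabilities(pixels, scale)
    return [[1 if rng.random() < p else 0 for p in probs]
            for _ in range(n_steps)]

if __name__ == "__main__":
    sparse_image = [0, 0, 255, 0]       # stand-in for a "1": few bright pixels
    dense_image = [200, 255, 180, 90]   # stand-in for an "8": many bright pixels
    for image in (sparse_image, dense_image):
        expected_per_step = sum(spike_probabilities(image))
        print(image, "expected spikes per step:", expected_per_step)
```

As long as no pixel hits the cap, the expected number of spikes per time step works out to `scale` times the number of pixels no matter which digit is shown, which is the equalizing effect mentioned above.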

And what about that frustration I mentioned all that time ago? Well this is really a footnote and I don't want to be beholden to this for all time but...

These papers use slightly different LIF neuron models and, what's more, neither of them works when I replace its model with the IZH model I have worked with in the past. I can understand why each individual group would want to have their own in-house neuron model they can tweak for whatever they're working on, but if your results don't generalize to other neuron models, why should I think they have anything to say about real neurons? Now, if you're just working on spiking neural nets for machine learning this is a non-issue; your main goal is to make a useful system. But for computational neuroscience, the usefulness of the system is tied to how closely it obeys the rules of real neuroscience. You aren't learning anything about the brain if your neurons don't work right. Maybe I'm being too harsh, maybe this is just user error and I should have tried harder to get different things to work with different neuron models, maybe each of these neuron models corresponds to a different type of neuron in the brain and they're all equally valid under certain conditions. But whatever the case, it will bias all my further reading on this topic, because I'll be thinking 'Sure, this works for their LIF model tuned with their constants, but would it work with HH, IZH, or FHN neurons? Would any of these models be capable of learning with the same learning rules that actually exist for real neurons? Would the learning rules described here work at all if they were implemented on real neurons?'. I think these are important questions, and I would love nothing more than for someone to call me an idiot and show me a paper unifying learning rules in real in vivo experiments and spiking neural networks and showing that certain neuron models are too simple or just inaccurate. It's just frustrating to not know. I'm drowning in papers I want to read but I still don't feel like any of them get to the heart of my questions.
I feel like my questions are simple, basic things I should be able to search for, and I can't find them. Am I searching wrong? Am I reading the answers and I'm too stupid to understand? Am I going to find out months from now that I've been wasting my time and everything I want to know has been in one textbook nobody has told me about? If nobody knows these things, why aren't they working on them? Sorry, that was just a rant and a stream of consciousness but I'm leaving it in. I plan on making another post with a detailed explanation of my questions that hopefully I can send to people. Maybe it will get some answers.